Fiddler’s Transformer tab has long been a simple way to examine how web assets are compressed over HTTP, especially as new compression engines (like Zopfli) and compression formats (like Brotli) emerged. However, the Transformer tab works on one Session at a time, which makes it cumbersome for evaluating the compressibility of an entire page or series of pages.

Introducing Compressibility

Compressibility is a new Fiddler 4 add-on¹ which allows you to easily find opportunities for compression savings across your entire site. Each resource dropped on the Compressibility tab is recompressed using several compression algorithms and formats, and the resulting file sizes are recorded:

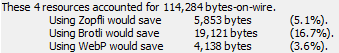
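To make the idea concrete, here is a minimal Python sketch of recompressing one resource with several encoders and recording the resulting sizes. It is not the add-on's code: the function names `recompressed_sizes` and `savings_percent` are hypothetical, and it uses only the standard library's Deflate and gzip encoders as stand-ins, since Zopfli, Brotli, and WebP need third-party packages.

```python
import gzip
import zlib


def recompressed_sizes(body: bytes) -> dict[str, int]:
    """Return the size in bytes of `body` under a few stdlib encoders.

    Stand-in for the add-on's encoder list (an assumption): only
    Deflate (zlib container) and gzip at two effort levels are tried.
    """
    return {
        "original": len(body),
        "deflate-1": len(zlib.compress(body, 1)),
        "deflate-9": len(zlib.compress(body, 9)),
        "gzip-9": len(gzip.compress(body, compresslevel=9)),
    }


def savings_percent(sizes: dict[str, int]) -> dict[str, float]:
    """Express each recompressed size as a percentage saved vs. the original."""
    original = sizes["original"]
    return {
        name: round(100.0 * (original - size) / original, 1)
        for name, size in sizes.items()
        if name != "original"
    }


if __name__ == "__main__":
    # Repetitive markup, similar in spirit to an uncompressed HTML response.
    body = b"<div class='row'><span>Fiddler</span></div>\n" * 500
    sizes = recompressed_sizes(body)
    for name, size in sizes.items():
        print(f"{name:>10}: {size} bytes")
    print(savings_percent(sizes))
```

Summing the per-resource dictionaries over a set of responses would give an aggregate figure for a whole page.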

You can select multiple resources to see the aggregate savings:

WebP savings are only computed for PNG and JPEG images; Zopfli savings for PNG files are computed by using the PNGDistill tool rather than just using Zopfli directly. Zopfli output is usable by all browsers (Zopfli is only a high-efficiency encoder for the Deflate format), while WebP is supported only by Chrome and Opera. Brotli is available in Chrome and Firefox, but only for content served from HTTPS origins.

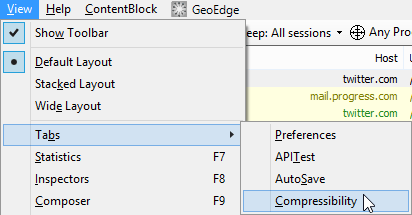
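The point that a better Deflate encoder needs no client changes can be illustrated with a short Python sketch. It does not use Zopfli itself (that would need a third-party package); instead it uses the standard library's `zlib` at different effort levels as a stand-in, and the helper name `deflate_variants` is hypothetical. The encoder effort changes, but one unmodified decoder reads every result.

```python
import zlib


def deflate_variants(body: bytes) -> dict[int, bytes]:
    """Compress `body` at several zlib effort levels (stand-in for Zopfli)."""
    return {level: zlib.compress(body, level) for level in (1, 6, 9)}


sample = b"<li class='item'>Compressibility</li>\n" * 200
variants = deflate_variants(sample)

for level, blob in variants.items():
    # The same decoder call works regardless of how hard the encoder tried.
    assert zlib.decompress(blob) == sample
    print(f"level {level}: {len(blob)} bytes")
```

A new format such as Brotli or WebP is different: the client needs a new decoder, which is why those savings depend on browser support.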

To show the Compressibility tab, simply install the add-on, restart Fiddler, and choose Compressibility from the View > Tabs menu².

The extension also adds ToWebP Lossless and ToWebP Lossy commands to the ImageView Inspector’s context menu.

I hope you find this new add-on useful; please send me your feedback so I can enhance it in future updates!

-Eric

¹ Note: Compressibility requires Fiddler 4, because there’s really no good reason to use Fiddler 2 any longer, and Fiddler 4 resolves a number of problems and offers extension developers the ability to utilize newer framework classes.

² If you love Compressibility so much that you want it to be shown in the list of tabs by default, type `prefs set extensions.Compressibility.AlwaysOn true` in Fiddler’s QuickExec box and hit Enter.