Update: The October 2018 Cumulative Security Update (KB4462919) brings the RS5 Cookie Control changes described below to Windows 10 RS2, RS3, and RS4.

Note: Most of the content about “Edge” in this post describes Edge Legacy; modern Edge is based on Chromium and behaves mostly like Chrome. See more discussion of 3P cookies in 2022’s New Recipes for 3P Cookies.

Cookies are one of the most crucial features in the web platform, and large swaths of the web don’t work properly without them. Unfortunately, cookies are also one of the primary mechanisms that trackers and ad networks utilize to follow users around the web, potentially impacting users’ privacy. To that end, browsers have offered cookie controls for over twenty years.

Back in 2010, I wrote a summary of Internet Explorer’s Cookie Controls. IE’s cookie controls were very granular and quite powerful. The basic settings were augmented with P3P, a once-promising feature that allowed sites to advertise their privacy practices and browsers to automatically enforce users’ preferences against cookies. Unfortunately, major sites created fraudulent P3P statements, regulators failed to act, and the entire (complicated) system collapsed. P3P was removed from IE11 on Windows 10 and never implemented in Microsoft Edge.

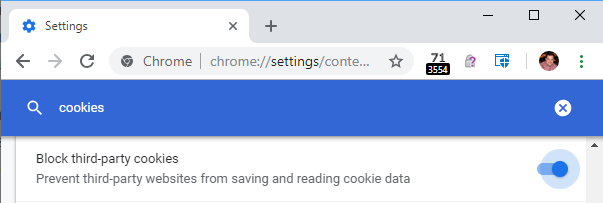

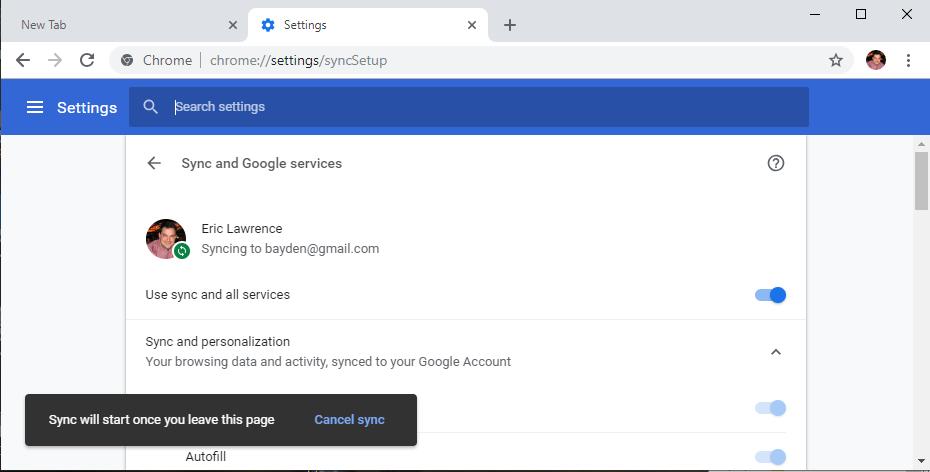

Instead, Edge Legacy offers a very simple cookie control in the Privacy and Security section of the settings. Under the Cookies option, you have three choices: Don’t block cookies (the default), Block all cookies, and Block only third party cookies:

This simple setting hides a bunch of subtlety that this post will explore.

Cookie => Cookie-Like

For the October 2018 update (aka “Redstone Five” aka “RS5”) we’ve made some important changes to Edge Legacy’s Cookie control.

The biggest of the changes is that Edge Legacy now matches other browsers and uses the cookie controls to restrict cookie-like storage mechanisms, including localStorage, sessionStorage, indexedDB, Cache API, and ServiceWorkers. Each of these features can behave much like a cookie, with a similar potential impact on users’ privacy. (See the “Chromium Audit” section below for more discussion.)

While we didn’t change the Edge Legacy UI, it would be accurate to change it to:

This change improves privacy and can even improve site compatibility. During our testing, we were surprised to discover that some website flows fail if the browser blocks only 3rd-party cookies without also blocking 3rd-party localStorage. This change brings Edge Legacy in line with other browsers, with minor exceptions. For example, in Firefox 62, when 3rd-party site data is blocked, sessionStorage is still permitted in a 3rd-party context. In Edge Legacy RS5 and Chrome, 3rd-party sessionStorage is blocked if the user blocks 3rd-party cookies.
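As a rough illustration of the rule described above, the following Python sketch models which cookie-like mechanisms a 3rd-party context loses when the user blocks 3rd-party cookies. The function name, the browser labels, and the lookup tables are hypothetical; they encode only what this post states (including the Firefox 62 sessionStorage exception), not any browser's actual code.

```python
# Cookie-like mechanisms that this post says Edge Legacy RS5 now gates.
COOKIE_LIKE = ("localStorage", "sessionStorage", "indexedDB",
               "Cache API", "ServiceWorkers")

# Exceptions named in the post: (browser, mechanism) pairs that stay
# available to 3rd parties even when 3rd-party data is blocked.
EXCEPTIONS = {("Firefox 62", "sessionStorage")}


def third_party_storage_blocked(browser: str, mechanism: str,
                                block_third_party: bool) -> bool:
    """Return True if `mechanism` is unavailable in a 3rd-party context."""
    if mechanism not in COOKIE_LIKE:
        raise ValueError(f"not modeled: {mechanism}")
    if not block_third_party:
        # Without the setting enabled, nothing is restricted.
        return False
    return (browser, mechanism) not in EXCEPTIONS
```

The browser strings are plain labels for this model, so any browser not listed in `EXCEPTIONS` is treated as blocking every mechanism in the list.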

Block Setting and Sending

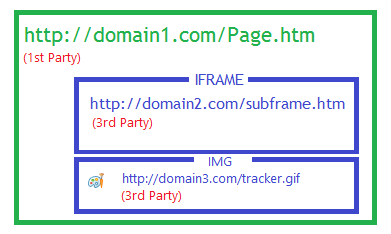

Another subtlety exists because of the ambiguous terminology “third-party cookie.” A cookie is just a cookie: it belongs to a site (eTLD+1). The “party” comes into play only in the context in which the cookie is set and the context in which it is later sent.

In the web platform, unless a browser implements restrictions:

- A cookie set in a first-party context will be sent to a first-party context

- A cookie set in a first-party context will be sent to a third-party context

- A cookie set in a third-party context will be sent to a first-party context

- A cookie set in a third-party context will be sent to a third-party context

For instance, in this sample page, if the IFRAME and IMG both set a cookie, these cookies are set in a third-party context:

- If the user subsequently visits domain2.com, the cookie set by that 3rd-Party IFRAME will now be sent to the domain2.com server in a 1st-Party context.

- If the user subsequently visits domain3.com, the cookie set by that 3rd-Party IMG will now be sent to the domain3.com server in a 1st-Party context.
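The first-party versus third-party distinction above can be sketched in a few lines of Python. This is a simplified model: `naive_site` approximates eTLD+1 by taking the last two host labels, whereas real browsers consult the Public Suffix List, and the top-level host `www.domain1.com` and the resource paths are made up for illustration.

```python
from urllib.parse import urlsplit


def naive_site(url: str) -> str:
    """Approximate a URL's site (eTLD+1) as its last two host labels.

    Assumption: real browsers use the Public Suffix List instead, so this
    gives wrong answers for suffixes such as "co.uk".
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def context_of(top_level_url: str, resource_url: str) -> str:
    """Classify a resource relative to the page shown in the address bar."""
    same_site = naive_site(top_level_url) == naive_site(resource_url)
    return "first-party" if same_site else "third-party"


# The IFRAME from domain2.com is third-party while embedded in another site...
print(context_of("https://www.domain1.com/page", "https://domain2.com/frame.html"))
# ...but the same site is first-party once the user navigates to it directly.
print(context_of("https://domain2.com/", "https://www.domain2.com/home"))
```

The same cookie is involved in both calls; only the context in which it would be set or sent differs.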

Historically, the “Block 3rd-party cookies” options in Edge Legacy and IE controlled only whether a cookie could be set from a 3rd-party context; they did not affect whether a cookie initially set in a 1st-party context would be sent to a 3rd-party context.

As of Edge Legacy RS5, setting “Block only 3rd-party cookies” also blocks cookies that were set in a 1st-party context from being sent in a 3rd-party context. This change is in line with the behavior of other browsers.
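The difference between the historical behavior and the RS5 behavior can be summarized as a pair of decision functions. This is a minimal sketch with hypothetical function and parameter names; it models only the “Block only 3rd-party cookies” option as described in this post.

```python
def may_set_cookie(block_third_party: bool, third_party_context: bool) -> bool:
    """Setting from a 3rd-party context is blocked both before and after RS5."""
    return not (block_third_party and third_party_context)


def may_send_cookie(block_third_party: bool, third_party_context: bool,
                    rs5: bool) -> bool:
    """Decide whether an existing cookie is attached to a request.

    Before RS5 the option did not affect sending at all; as of RS5,
    cookies are also withheld from 3rd-party contexts.
    """
    if not (block_third_party and third_party_context):
        return True
    return not rs5


# A cookie set while visiting a site directly, later requested from a frame:
print(may_send_cookie(True, True, rs5=False))  # historical behavior
print(may_send_cookie(True, True, rs5=True))   # RS5 behavior
```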

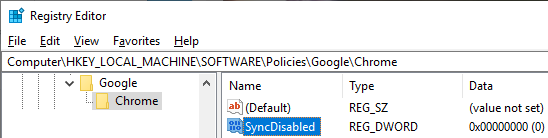

Edge Legacy Controls Impacted By Zones

With the move from Internet Explorer to Edge Legacy, the Windows Security Zones architecture was largely left by the wayside.

However, cookie controls are one of a small number of exceptions to this; Edge Legacy applies the cookie restrictions only in the Internet Zone, the zone almost all sites fall into (outside of users on corporate networks).

Perhaps surprisingly, cookie-like features and the document.cookie getter are restricted, even in the Intranet and Trusted zones.

Chrome and Firefox do not take Windows Security Zones into account when applying cookie policies. Modern Edge, based on Chromium, does not use Zones for this purpose.
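For Edge Legacy, one reading of the zone behavior described above is sketched below. The feature labels and the function name are invented for this example, and the model covers only the three zones and three feature categories mentioned in this section.

```python
ALL_ZONE_FEATURES = ("document.cookie-getter", "cookie-like-storage")


def restriction_applies(feature: str, zone: str) -> bool:
    """Model where Edge Legacy enforces the user's cookie setting.

    feature: "http-cookie", "document.cookie-getter", or "cookie-like-storage"
    zone:    "Internet", "Intranet", or "Trusted"
    """
    if feature == "http-cookie":
        # Cookie restrictions proper are applied only in the Internet Zone.
        return zone == "Internet"
    if feature in ALL_ZONE_FEATURES:
        # These are restricted even in the Intranet and Trusted zones.
        return True
    raise ValueError(f"not modeled: {feature}")
```

Under this model a site in the Intranet zone keeps its HTTP cookies but can still find its cookie-like storage restricted.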

Test Cases

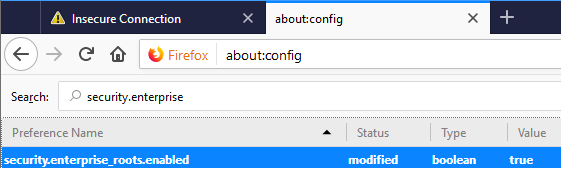

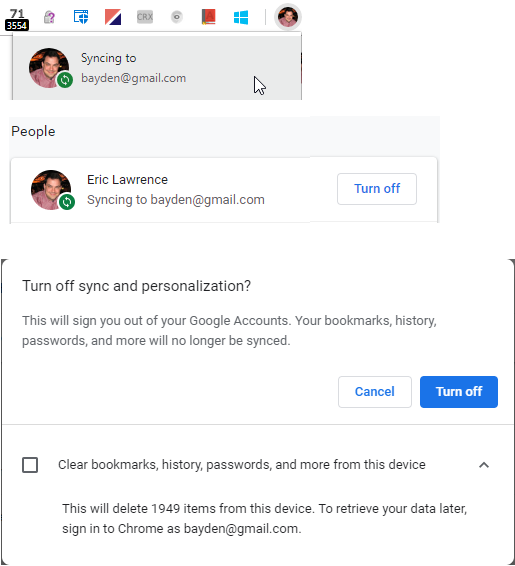

I’ve updated my old “Cookies” test page with new storage test cases. You can set your browser’s privacy controls:

…then visit the test page to see how the browser limits features from 3rd-party contexts. You can use the Swap button on the page to swap 1st-party and 3rd-party contexts to see how restrictions have been applied. You should see that the latest versions of Chrome, Firefox, and Edge Legacy all behave pretty much the same way.

One interesting exception is that when configured to Block 3rd-party Cookies, Edge Legacy still allows 3rd-party contexts to delete their own cookies. (This is used by federated logout pages, for instance.) Chrome does not allow deletion in this scenario; the attempt to delete cookies is ignored.
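The deletion exception can be modeled as follows. This is a hedged sketch: it assumes a deletion is expressed as a cookie write with a non-positive `Max-Age` (an `Expires` date in the past is another common form that is not modeled here), and the browser labels and return strings are made up for illustration.

```python
from typing import Optional


def third_party_cookie_write(browser: str, max_age: Optional[int]) -> str:
    """Outcome of a cookie write from a 3rd-party context while
    "Block 3rd-party cookies" is enabled.

    max_age: the Max-Age attribute of the write, or None if absent.
    """
    is_deletion = max_age is not None and max_age <= 0
    if is_deletion and browser == "Edge Legacy":
        # A federated logout frame can still clear its own cookie.
        return "deleted"
    # Ordinary writes are blocked everywhere; Chrome also ignores deletions.
    return "ignored"
```

For example, a logout frame that clears its session cookie with `Max-Age=0` succeeds in this model only when `browser` is `"Edge Legacy"`.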

-Eric

Appendix: Chromium Audit

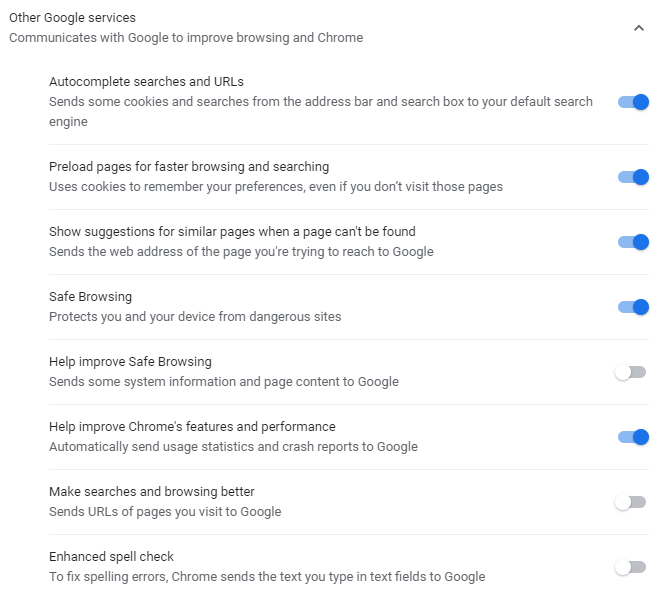

In the course of our site-compatibility investigations, I had a look at Chromium’s behavior with regard to its cookie controls. In Chromium, Blink asks the host application for permission to use various storages, and these chokepoints check methods like IsFullCookieAccessAllowed(), which is sensitive to the various “Block Cookies” settings. (Things got much more complicated between 2018 and 2022; see this lengthy comment.)

Mojo messages come up through renderer_host/chrome_render_message_filter.cc, gating access to:

Additionally, ChromeContentBrowserClient gates:

Elsewhere, IsCookieAccessAllowed is used to limit:

- Flash Storage (PP_FLASHLSORESTRICTIONS_BLOCK)

- Client Hints

Of these, Edge Legacy does not support WebSQL, FileSystem, SharedWorker, or Client Hints.

Update: As of Chromium v88, Windows Integrated Authentication (Kerberos/NTLM) is now blocked in third-party contexts if 3P Cookies are blocked.

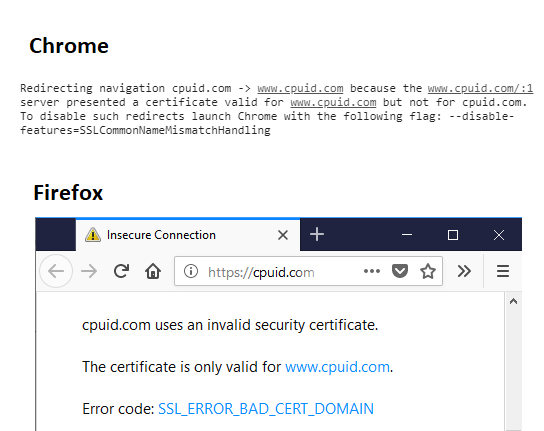

…but I end up on an error page: