Back in February, I wrote about browser password managers and mentioned that it’s important to understand the threat model when deciding how to implement features and their security protections.

Generally speaking, “keeping secrets from yourself” is a fool’s errand, so it’s a waste of time and effort to encrypt data if you have to store the decryption key in a place that’s accessible to the attacker. That’s one reason why physically local attacks and machines infected with malware are generally outside the browser’s threat model: if an attacker has access to the keys, using encryption isn’t going to protect your data.

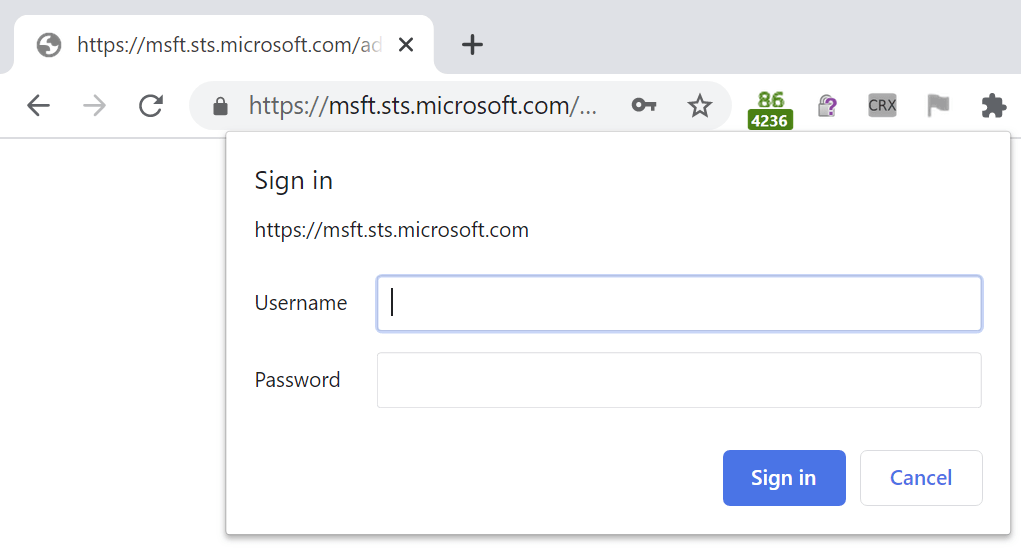

Web browsers store a variety of highly sensitive data, including credit card numbers, passwords, and cookies (which often contain authentication tokens that are functionally equivalent to passwords). When storing this extra-sensitive data, Chromium encrypts it using AES-256 on Windows (AES-128 on Linux/Mac) and stores the encryption key in an OS storage area. This feature is called Local Data Encryption. Not all of the browser’s data stores use encryption; for instance, the browser cache does not. If your device is at risk of theft, you should be using your OS’s full-disk encryption feature, e.g. BitLocker on Windows.

The profile’s encryption key is protected by OSCrypt: on Windows, the OS storage area is a DPAPI-encrypted blob¹; on Mac, it’s the Keychain; on Linux, it’s Gnome Keyring or KWallet.

Notably, all of these OS storage areas encrypt the AES key using a key accessible to (some or all²) processes running as the user. This means that if your PC is infected with malware, the bad guys can get decrypted access to the browser’s storage areas.

However, that’s not to say that Local Data Encryption is entirely without value. For instance, I recently came across a misconfigured web server that allowed any visitor to explore the server owner’s profile (e.g. c:\users\sally), including their Chrome profile folder. Because the browser key in the profile is encrypted using a key stored outside of the Chrome profile, their most sensitive data remained encrypted.
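To make the two-layer key arrangement concrete, here is a minimal Python sketch. It is a toy model only: `toy_seal` and `toy_open` are hypothetical helpers built on a SHA-256 keystream plus an HMAC tag, standing in for AES and for the OS storage area (DPAPI, Keychain, and so on). It is not Chromium’s code and not real cryptography. The `os_key` and `browser_key` names are likewise made up for illustration.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 in counter mode. A stand-in, NOT real AES."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt and tag plaintext under key; returns nonce + tag + ciphertext."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    body = bytes(a ^ b for a, b in zip(plaintext, stream))
    tag = hmac.new(key, nonce + body, hashlib.sha256).digest()
    return nonce + tag + body


def toy_open(key: bytes, blob: bytes) -> bytes:
    """Verify the tag and decrypt; raises ValueError if the key is wrong."""
    nonce, tag, body = blob[:16], blob[16:48], blob[48:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("wrong key or corrupted data")
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream))


# Hypothetical stand-ins for the two keys described above.
os_key = secrets.token_bytes(32)       # held by the OS, outside the profile
browser_key = secrets.token_bytes(32)  # the profile's data-encryption key

# These two blobs are all that lives inside the profile folder.
wrapped_browser_key = toy_seal(os_key, browser_key)
encrypted_cookie = toy_seal(browser_key, b"session=abc123")

# With the OS key, the chain unwinds: OS key -> browser key -> data.
recovered_key = toy_open(os_key, wrapped_browser_key)
print(toy_open(recovered_key, encrypted_cookie))
```

Someone who copies only the profile folder holds `wrapped_browser_key` and `encrypted_cookie` but not `os_key`, so `toy_open` fails for them. The same failure is what the legitimate user sees if the OS-held key is ever lost or corrupted.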

Similarly, if a laptop isn’t protected with Full Disk Encryption, Local Data Encryption will make a thief’s life harder.

Tradeoffs?

Okay, so Local Data Encryption might be useful. What are the downsides?

The obvious tradeoff is simple and mild: There’s always a performance cost to encrypting and decrypting data. However, AES is extremely fast (>1GB/sec) on modern hardware, and the data size of cookies and credentials is relatively small.

The bigger risk is complexity: If something goes wrong with either of the keys (the browser’s key that encrypts the data or the OS’s key that encrypts the browser’s key), then the user’s cookie and credential data will be unrecoverable. The user will be forced to re-log into every website and re-store all of the credentials in their password manager (or recover their credentials from the cloud using the browser’s sync feature).

Unfortunately, I seem to be a magnet for such problems.

On Mac, Edge recently had a problem where the browser would fail to get the browser key from the OS keychain. The browser would offer to wipe the keychain (losing all of your data), but ignoring the error message and restarting would typically correct the problem. A fix for that bug was recently issued.

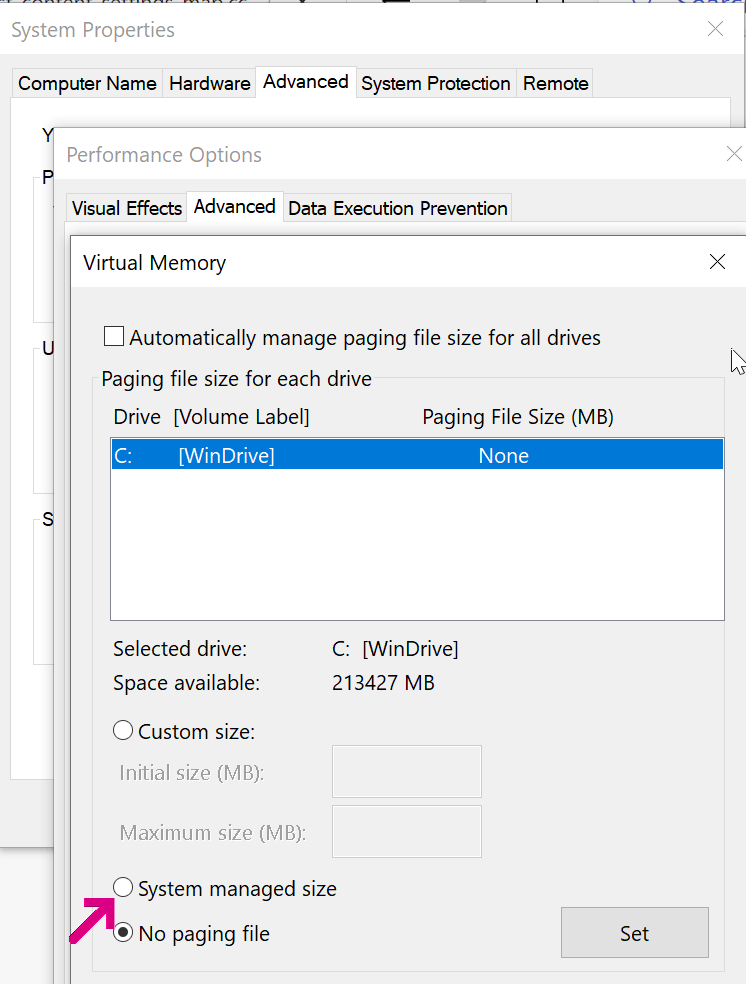

On Windows, DPAPI failures are typically silent: your data disappears with nary a message box.

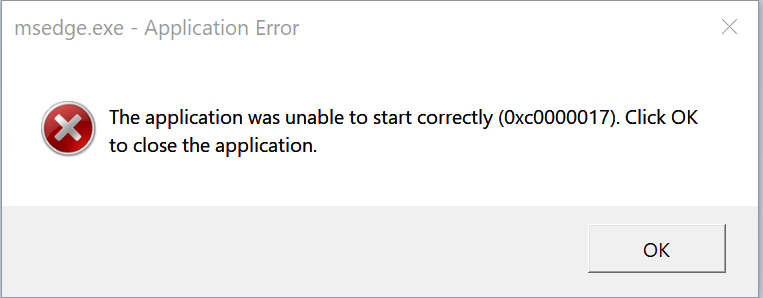

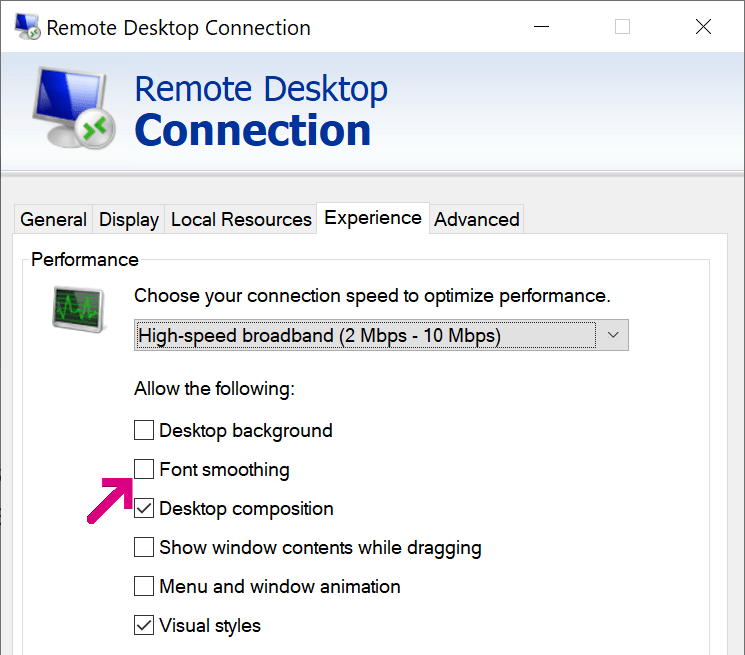

When I first rejoined Microsoft in 2018, a bug in AAD corrupted my OS DPAPI key, which made lsass spin a CPU core forever whenever a Chromium-based browser launched. Troubleshooting this problem required months of effort.

More recently, we’ve heard from some users on Windows 10 that Edge and Chrome forget their data frequently (and similar effects are seen in other DPAPI-using applications).

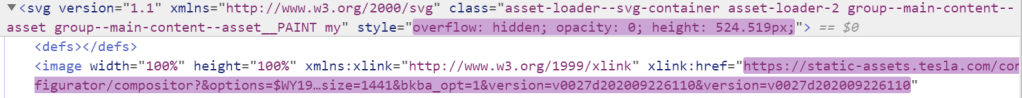

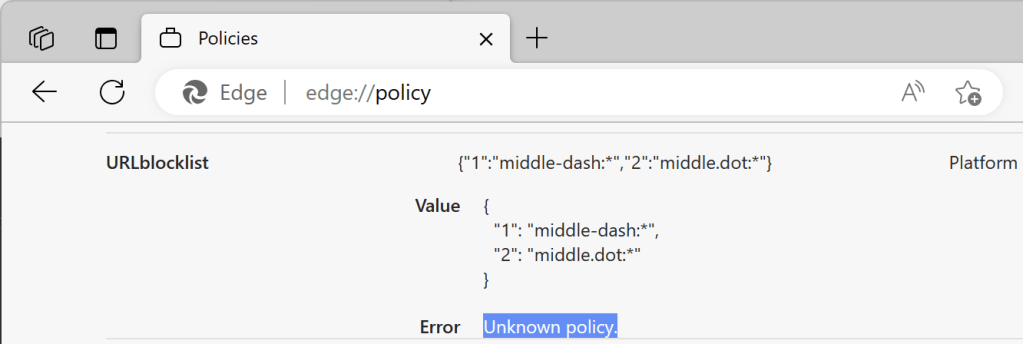

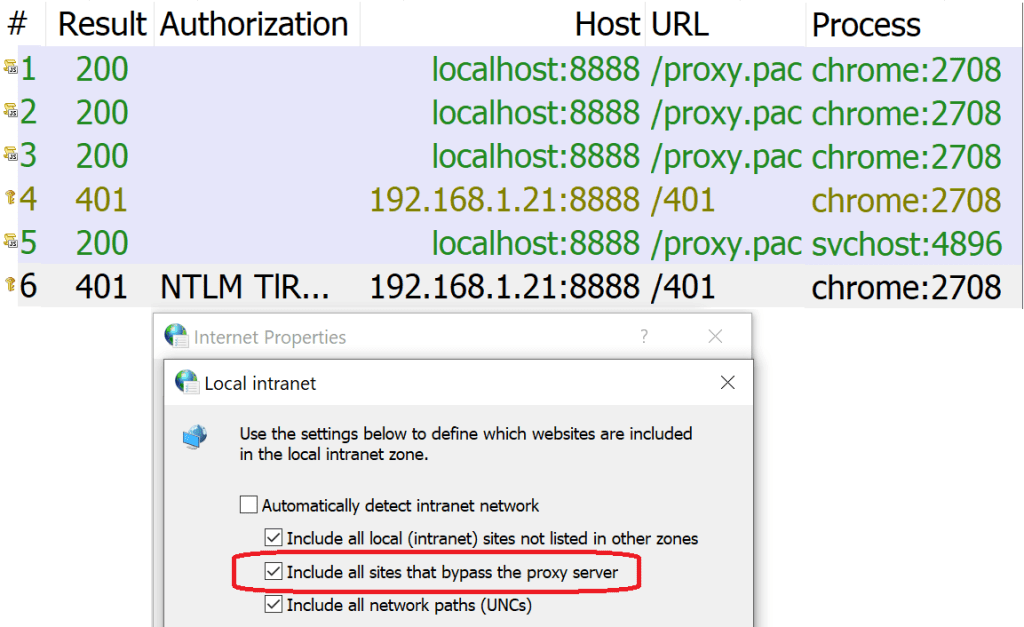

Users in this state should visit chrome://histograms/OSCrypt in Chrome or Edge during the same browser session in which they first notice that their sensitive data has gone missing. They will see an entry inside OSCrypt.Win.KeyDecryptionError with a value of -2146893813 (NTE_BAD_KEY_STATE), indicating that the OS API was unable to use the currently logged-in user’s credentials to decrypt the browser’s encryption key:

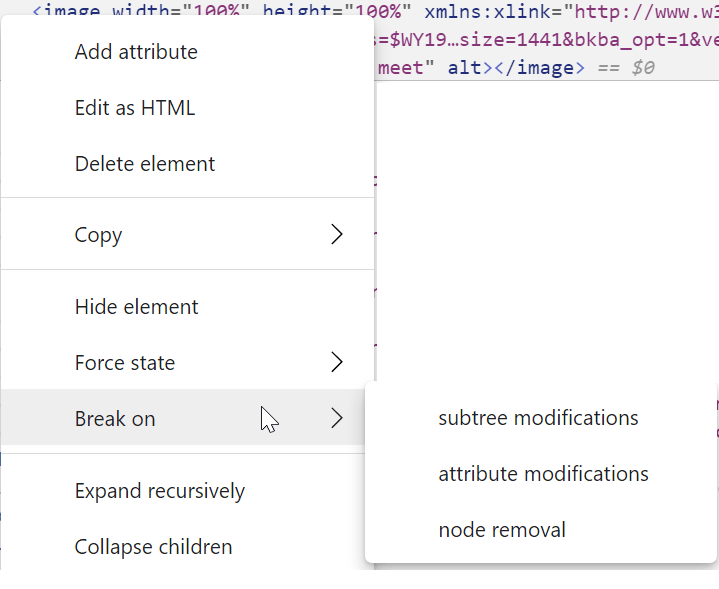
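The histogram reports the error as a signed 32-bit integer, while Windows documentation usually lists error codes in hexadecimal. As a small sketch of the conversion, the helper below (`to_hresult_hex` is a name invented for this example) reinterprets the signed value as an unsigned 32-bit number:

```python
def to_hresult_hex(signed_value: int) -> str:
    """Reinterpret a signed 32-bit integer as an unsigned 32-bit hex code."""
    return f"0x{signed_value & 0xFFFFFFFF:08X}"


# The value from the histogram entry above.
print(to_hresult_hex(-2146893813))  # 0x8009000B
```

0x8009000B is the value that winerror.h assigns to NTE_BAD_KEY_STATE, which makes the error easier to look up.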

Fortunately (for us, not for him), this problem hit one of the best engineers in the world, and he was able to develop a solid theory of the root cause of the problem. If you find your system in this state, try running the following command in PowerShell:

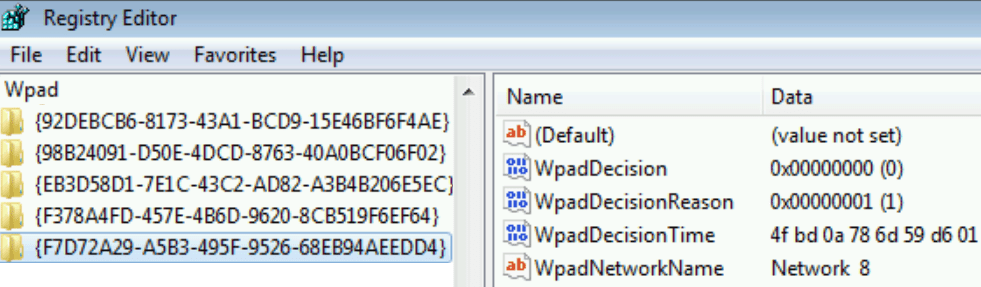

    Get-ScheduledTask | foreach { If (([xml](Export-ScheduledTask -TaskName $_.TaskName -TaskPath $_.TaskPath)).GetElementsByTagName("LogonType").'#text' -eq "S4U") { $_.TaskName } }

This will list off any scheduled tasks using the S4U feature suspected of causing the incorrect DPAPI credentials.
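For readers who want to see what that one-liner is checking, here is a rough Python sketch of the same test applied to a single exported task definition. The `uses_s4u` helper and the sample XML are hypothetical, written only for illustration. A real export contains many more elements, and this sketch assumes the XML has already been read into a string.

```python
import xml.etree.ElementTree as ET


def uses_s4u(task_xml: str) -> bool:
    """Return True if any LogonType element in the task XML is 'S4U'."""
    root = ET.fromstring(task_xml)
    for element in root.iter():
        # Drop the '{namespace}' prefix that ElementTree adds to tag names.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "LogonType" and (element.text or "").strip() == "S4U":
            return True
    return False


# Hypothetical, heavily trimmed task definition for illustration only.
SAMPLE_TASK_XML = """<Task version="1.2" xmlns="http://schemas.microsoft.com/windows/2004/02/mit/task">
  <Principals>
    <Principal id="Author">
      <UserId>sally</UserId>
      <LogonType>S4U</LogonType>
    </Principal>
  </Principals>
</Task>"""

print(uses_s4u(SAMPLE_TASK_XML))
```

Like the PowerShell command, this looks only at the LogonType element and ignores everything else in the task definition.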

Update: The S4U bug was fixed for Windows 10 2004 and 20H2 as a part of the February 2021 Windows Updates.

-Eric

1 Windows’ DPAPI itself uses AES256/SHA2 to encrypt the blob for non-Domain user accounts, but defaults to 3DES/SHA1 for Domain accounts. Administrators who do not need legacy compatibility for their domain users may specify algorithm ids in registry keys to choose among RC4/SHA1, 3DES/SHA1 and AES256/SHA512. You can learn more about hacking DPAPI here.

2 The question of which processes can ask the OS to decrypt the browser’s key is a somewhat interesting one. On Windows, Chromium’s use of DPAPI’s CryptProtectData allows any process running as the user to make the request; there’s no attempt to use additional entropy to do “better” encryption, largely because there’s nowhere safe to store that additional entropy. On modern Windows, there are some other mechanisms that might provide somewhat more isolation than raw CryptProtectData, but full-trust malware is always going to be able to find a way to get at the data. Mid-2024 Update: The Chrome team has introduced an App-Bound Encryption feature that locks down the storage on Windows more tightly.

On Mac, the Keychain protection restricts access to data such that it’s not accessible to every process running as the user, but this doesn’t mean the data is immune from malware. Malware on Mac must instead use Chrome as a sock-puppet, having it perform all of the data decryption tasks, driving it via extensibility interfaces or other mechanisms.

The overall threat model against local attackers is further complicated by the mechanisms and constraints of process isolation: for instance, attackers can dump the memory of a browser process, or inject threads into that process, enabling malware to steal the data after the browser has decrypted it.

Mobile platforms (iOS/Android) tend to have the strongest story here, with more robust process isolation, code-signing requirements, hardware-backed secure enclaves that require biometrics for release, etc.